We held our annual ACE-CSR event on 2 November 2016. This post and its title are drawn from the second talk, by Dr Jens Krinke, a senior lecturer in the Centre for Research on Evolution, Search and Testing, and the Software Systems Engineering group at UCL, who outlined work he conducted with Pengfei Wang that found flaws in the Linux kernel.

The work Krinke presented, completed over the last year, revolves around double-fetch problems, which got their name in 2008 in a study that found exploitable vulnerabilities in the Windows kernel. In an updated study in 2013, Mateusz “j00ru” Jurczyk and Gynvael Coldwind produced a paper that examined the Windows kernel and found 89 potential issues, 36 of which were highly exploitable. Most of the paper focused on how to exploit these bugs; today, if you find such a vulnerability you can download an exploit from GitHub.

Many of these vulnerabilities have now been fixed, but Jurczyk and Coldwind’s analysis was extremely slow: they essentially simulated Windows over and over again, and it took them 15 hours just to get to the boot screen. Because the analysis was dynamic, they were only able to cover a subset of the drivers present in the system, and they said outright that they knew there were other vulnerabilities present. Krinke was sure there were vulnerabilities of this type in Linux, but no one had audited the Linux kernel in detail.

Krinke and Wang wanted to audit Linux, looking at the source code directly and including all the drivers – which was essential, because, as it turned out, roughly 80% of the problems they found were in the drivers.

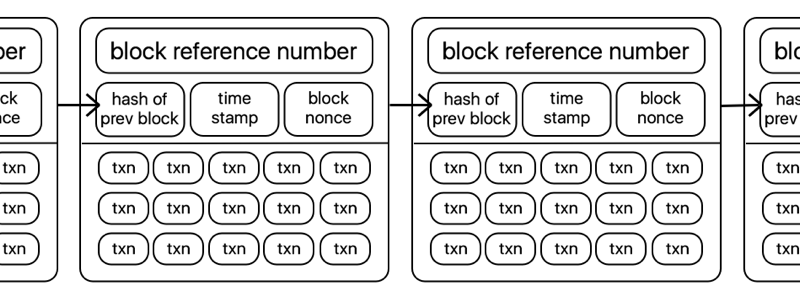

Double-fetch problems are memory access conflicts that arise in the interface between the operating system kernel and an application when data is changed between the time it’s checked and the time it’s actually used. It works this way: every process a user starts has its own virtual memory space that is isolated from other user memory spaces; only the kernel can see all memory spaces. However, there must be interaction, and this is done through system calls. So, a user selects some data and asks the system to operate on it. The data remains in the user space but the system copies it to the kernel and checks the data is valid before using it. The upshot is that the kernel may fetch the data twice: once for checking and once for use. A malicious application that intercedes between those two fetches and changes the data after it’s been checked but before it’s used can cause system crashes.

If an error like this arises outside of the operating system, it causes software errors, but this affects only you; when it happens in the operating system, an attacker can take over the complete system.
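The check-then-use window described above can be sketched in a few lines of C. This is a user-space simulation only, not kernel code and not taken from the talk: `struct msg`, `KBUF_SIZE`, the two handler functions and the `race_hook` callback are all hypothetical names, and the callback stands in for a second user thread that changes the data between the two fetches. To stay safe to run, the handlers return the length they would use rather than actually copying past a buffer.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical message living in "user" memory. */
struct msg {
    size_t len;        /* length claimed by the application */
    char   data[64];
};

#define KBUF_SIZE 16   /* assumed size of the kernel-side buffer */

/* Stand-in for a racing user thread; runs between check and use. */
typedef void (*race_hook)(struct msg *user);

/* Vulnerable pattern: user->len is fetched twice.
 * Returns the length that would be used, or -1 if the check rejects it. */
long handle_double_fetch(struct msg *user, race_hook hook)
{
    if (user->len > KBUF_SIZE)   /* fetch 1: check */
        return -1;
    if (hook)
        hook(user);              /* the race window */
    return (long)user->len;      /* fetch 2: use, value may have changed */
}

/* Safer pattern: fetch once into a local copy, then check and use the copy. */
long handle_single_fetch(struct msg *user, race_hook hook)
{
    size_t len = user->len;      /* the only fetch */
    if (len > KBUF_SIZE)
        return -1;
    if (hook)
        hook(user);              /* changes to user memory no longer matter */
    return (long)len;
}

/* The "malicious" change: enlarge the length after it has been checked. */
void grow_len(struct msg *user)
{
    user->len = 64;
}

/* Small demonstration of both handlers under the same simulated race. */
void demo(void)
{
    struct msg m = { .len = 8 };
    assert(handle_double_fetch(&m, grow_len) == 64); /* check bypassed */

    m.len = 8;
    assert(handle_single_fetch(&m, grow_len) == 8);  /* checked value used */
}
```

In the vulnerable handler the value that passed the check (8) is not the value that gets used (64), which is larger than the assumed 16-byte kernel buffer. Copying the data once and working only on the copy closes the window.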

Analysing this process is extremely hard, especially because exactly what’s occurring is unknown beforehand. Krinke and Wang instead created a two-step analysis. First, a pattern-based double-fetch analysis based on Coccinelle examined the source code to find occurrences; these were then manually analysed to determine how the data was used and what the consequences were. The results were divided into three categories: size checking, type selection, and shallow copy. In the 40,000 source code files comprising Linux, they found 90 such situations, 57 of them in drivers. Five were true bugs; all of them have been confirmed, and three are exploitable vulnerabilities. In checking back, Krinke and Wang found that some of these bugs are at least ten years old.

Krinke and Wang also applied their analysis to Android (a variant of Linux) and FreeBSD. The latter, which is more security-oriented in design, had no problems. Android had only three bugs, but they also found a problem that had been fixed in Linux (but not in Android). As the bug was in the file system, Android apps with native binary code that interacts with the phone at the file system level can also exploit it. After studying these three operating systems with Coccinelle, they can say that, once these bugs have been fixed, all three are likely to be free of double-fetch vulnerabilities.