The opening event for the UCL Academic Centre of Excellence for Cyber Security Research in the 2015–2016 academic term featured three speakers: Earl Barr, whose work on approximating program equivalence has won several ACM distinguished paper awards; Mirco Musolesi from the Department of Geography, whose background includes a degree in computer science and an interest in analysing myriad types of data while protecting privacy; and Susan Landau, a professor at Worcester Polytechnic Institute, a visiting professor at UCL, and an expert on cyber security policy, whose books include Privacy On the Line: the Politics of Wiretapping and Encryption (with Whitfield Diffie) and Surveillance or Security? The Risks Posed by New Wiretapping Technologies.

Detecting malware and IP theft through program similarity

Earl Barr is a member of the software systems engineering group and the Centre for Research on Evolution, Search, and Testing. His talk outlined his work on using program similarity to determine whether two arbitrary programs have the same behaviour, applied to two areas relevant to cyber security: malware and intellectual property theft in binaries (that is, code reused in violation of its licence).

Barr began by outlining his work on detecting malware, comparing the problem to that facing airport security personnel trying to find a terrorist among millions of passengers. The work begins with profiling: collect two zoos, one of benign programs and one of malware, and then ask whether the program under consideration is more likely to belong to the benign zoo or the malware zoo.

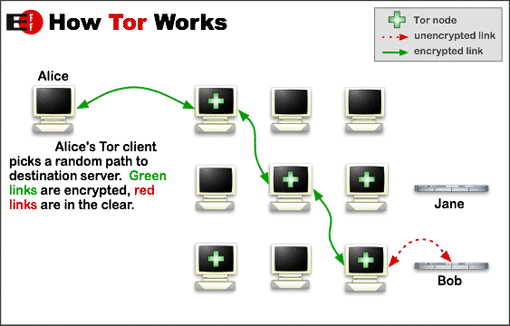

Rather than study the structure of the binary, Barr views the program as a string of 0s and 1s, whose boundaries may not coincide with the program’s instructions, and uses information theory to create a measure of dissimilarity, the normalised compression distance (NCD). The NCD approximates a distance based on Kolmogorov complexity, the length of the shortest description of an object. Because Kolmogorov complexity cannot be computed directly, the NCD substitutes the output size of a real compression algorithm, which ignores the details of the instruction set architecture for which the binary is written.
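To make the idea concrete, here is a minimal sketch of the standard NCD formula, NCD(x, y) = (C(xy) − min(C(x), C(y))) / max(C(x), C(y)), where C is the compressed size. It assumes `zlib` as the compressor, which the talk did not specify. The `nearest_zoo` helper and its mean-distance rule are hypothetical illustrations of the profiling step, not Barr's actual classifier.

```python
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the zlib-compressed data (assumed compressor)."""
    return len(zlib.compress(data, 9))


def ncd(x: bytes, y: bytes) -> float:
    """Normalised compression distance between two byte strings.

    Values near 0 mean the inputs share most of their content;
    values near 1 mean the compressor finds little in common.
    """
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def nearest_zoo(sample: bytes, zoos: dict[str, list[bytes]]) -> str:
    """Hypothetical classifier: pick the zoo with the lowest mean NCD to sample."""
    def mean_distance(members: list[bytes]) -> float:
        return sum(ncd(sample, m) for m in members) / len(members)

    return min(zoos, key=lambda label: mean_distance(zoos[label]))
```

In use, `zoos` would map labels such as `"benign"` and `"malware"` to lists of raw binaries read from disk. Note that zlib only finds repeats within a 32 KB window, so a compressor with a longer range would likely be needed for realistic binaries.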

Using these techniques to analyse a malware zoo collected from sources such as Virus Watch, Barr was able to achieve a 95.7% accuracy rate. He believes that although this technique isn’t suitable for contemporary desktop anti-virus software, it opens a new front in the malware detection arms race. Still, Barr is aware that malware writers will rapidly develop countermeasures and his group is already investigating counter-countermeasures.

Malware writers have three avenues for blocking detection: injecting new content that looks benign; encryption; and obfuscation. Adding new content threatens the malware’s viability: raising the NCD by 50% requires doubling the size of the malware. Encryption can be used against the malware writer: applying a language model across the program reveals a distinctive saw-toothed pattern of regions with low surprise and low entropy alternating with regions of high surprise and high entropy (that is, regions with ciphertext). Obfuscation is still under study: the group is using three obfuscation engines available for Java and applying them repeatedly to Java malware. Measuring the NCD after each application shows that after 100 iterations the NCD approaches 1 (that is, the two items being compared are dissimilar), but that two of the three engines make errors after 200 applications. Unfortunately for malware writers, this technique also causes the program to grow in size. The cost of obfuscation to malware writers may therefore be greater than that imposed upon white hats.
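The saw-toothed pattern described for encrypted regions can be illustrated with a much simpler stand-in for a language model: the Shannon entropy of the byte distribution in a sliding window. This is a rough sketch rather than the group's method, and the function names, window size and step are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def shannon_entropy(window: bytes) -> float:
    """Entropy of the byte distribution in window, in bits per byte (0 to 8)."""
    if not window:
        return 0.0
    total = len(window)
    counts = Counter(window)
    # Sum of p * log2(1/p) over the byte values that occur in the window.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def entropy_profile(data: bytes, window: int = 256, step: int = 256) -> list[float]:
    """Entropy of each window across data (window and step are assumed values)."""
    last_start = max(len(data) - window + 1, 1)
    return [shannon_entropy(data[i:i + window]) for i in range(0, last_start, step)]
```

Plotting `entropy_profile(binary)` for a program that mixes ordinary code with ciphertext should show low values over the plain regions and values approaching 8 bits per byte over the encrypted ones.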
