Here I describe analysis by myself and colleagues Albesë Demjaha and David Pym at UCL, which originally appeared at the STAST workshop in late 2019 (where it was awarded best paper). The work was the basis for a talk I gave at Cambridge Computer Laboratory earlier this week (I thank Alice Hutchings and the Security Group for hosting the talk, as it was also an opportunity to consider this work alongside themes raised in our recent eCrime 2019 paper).

Secure behaviour in organisations

Both research and practice have shown that security behaviours, encapsulated in policy and advised in organisations, may not be adopted by employees. Employees may not see how advice applies to them, find it difficult to follow, or regard the expectations as unrealistic. Employees may, as a consequence, create their own alternative behaviours as an effort to approximate secure working (rather than totally abandoning security). Organisational support can then be critical to whether secure practices persist. Economics principles can be applied to explain how complex systems such as these behave the way they do, and so here we focus on informing an overarching goal to:

Provide better support for ‘good enough’ security-related decisions, by individuals within an organization, that best approximate secure behaviours under constraints, such as limited time or knowledge.

Traditional economics assumes decision-makers are rational, and that they are equipped with the capabilities and resources to make the decision which will be most beneficial for them. However, people have reasons, motivations, and goals when deciding to do something — whether they do it well or badly, they do engage in thinking and reasoning when making a decision. We must capture how the decision-making process looks for the employee, as a bounded agent with limited resources and knowledge to make the best choice. This process is more realistically represented in behavioural economics. And yet, behaviour intervention programmes mix elements of both of these areas of economics. It is by considering these principles in tandem that we explore a more constructive approach to decision-support in organisations.

Contradictions in current practice

A bounded agent often settles for a satisfactory decision, by satisficing rather than optimising. For example, the agent can turn to ‘rules of thumb’ and make ad-hoc decisions, based on a quick evaluation of perceived probability, costs, gains, and losses. We can already imagine how these restrictions may play out in a busy workplace. This leads us toward identifying those points of engagement at which employees ought to be supported, in order to avoid poor choices.
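
The difference between satisficing and optimising can be made concrete with a minimal sketch. Everything here is an illustrative assumption rather than part of our framework: the option names, the utility scores, and the aspiration threshold are all made up. The optimiser evaluates every option, while the satisficer stops at the first option that is 'good enough'.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def optimise(options: Iterable[T], utility: Callable[[T], float]) -> T:
    """Evaluate every option and return the one with the highest utility."""
    return max(options, key=utility)


def satisfice(
    options: Iterable[T], utility: Callable[[T], float], aspiration: float
) -> Optional[T]:
    """Return the first option that meets the aspiration level, else None."""
    for option in options:
        if utility(option) >= aspiration:
            return option  # stop searching as soon as something is 'good enough'
    return None


# Hypothetical choices, in the order a busy employee might encounter them,
# each with a made-up utility score.
options = [("ignore update", 0.2), ("postpone update", 0.6), ("update now", 0.9)]


def score(option):
    return option[1]


best = optimise(options, score)                          # considers all three options
good_enough = satisfice(options, score, aspiration=0.5)  # stops at the second option
```

In this sketch the satisficer settles on postponing the update, because it is the first option to clear the threshold, even though a better option exists further along. The order in which choices are encountered therefore matters for a bounded agent in a way that it does not for an optimiser.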

If employees had full knowledge of how to do the right thing, a behaviour change intervention would not be necessary in the first place. We then use the term bounded security decision-making, to move away from the contradiction of applying behavioural intervention concepts to security while assuming that the targets of those interventions already know how to make the change in behaviour happen.

This assumption leads to some contradictions we observed in current practice:

- Security behaviour provisions assume that the employee (as decision-maker) has resources available to complete training and follow policies, but in an organisation, the decision-maker is busy with their paid job. For the busy decision-maker, doing security requires a loss to something else, and they often have to find the time and resources themselves.

- The security team in an organisation operates an information asymmetry, where the security team are the experts and employees must be assumed to be (security) novices. Employees then will need to be told the cost of security and exactly what the steps are. Unchecked, this leads to satisficing. And yet, current approaches appeal to the skilful user.

- Advice is given assuming that what is advised is the best choice, and there is no other choice to be articulated. The advocated choice is rarely, if ever, presented alongside other choices (such as previously sanctioned behaviours, or employees’ own, ad-hoc, ‘shadow security’ behaviours).

- Available choices must be edited as systems and technologies change. Otherwise, the decision-maker is often left to do the choice-editing (while at the same time being assumed to be a novice, as above). In this sense, security behaviour change can rest heavily on effective communication and not just the behaviour itself.

Our framework provides the foundation to explore examples of how to do things better. For instance, employees are often expected to complete security training, but then also have to find the time themselves to complete the training, which would also require them to defend that position to peers and managers when it takes time away from their paid work. A better approach would be for the security team to coordinate with other functions in the organisation – foremost, human resources – to negotiate paid working time in which training can be completed as necessary, and which is recognised by others in the organisation.

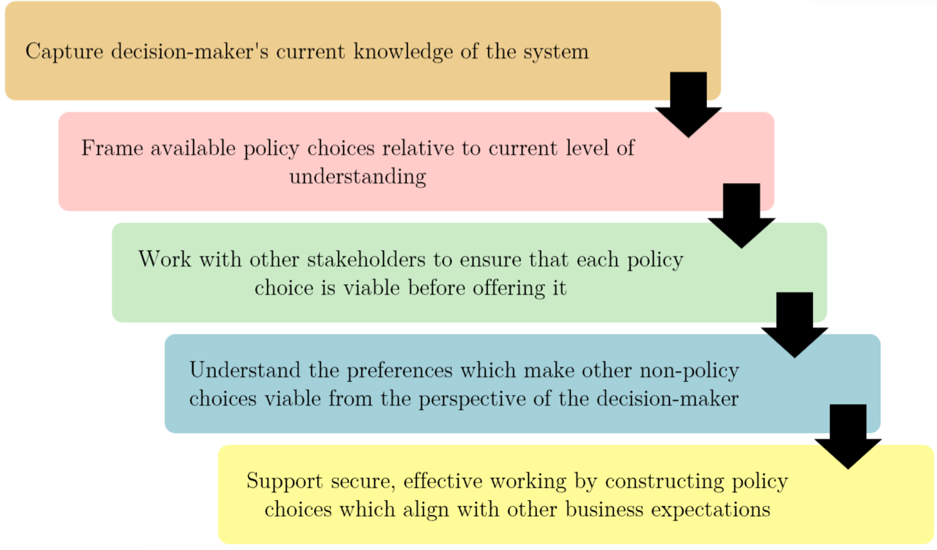

A framework for bounded security decision-making

We applied this framework to a behaviour which is often promoted by experts, to ‘keep your software up-to-date’. Applying the framework, it may be necessary to:

- map advice to specific devices and operating systems to establish the effects (and the costs)

- test the updates on devices and software portfolios similar to those used by employees, and communicate specifically the effects of update(s) upon the way the device and its applications will behave (capturing gains and losses)

- declare more precisely when to check for updates, and limit loss aversion by negotiating time allowance for updates to be applied

- most candidly, acknowledge that at the root of frustrations about insecure devices is that ‘not updating’ is an available option, given other pressures upon the employee.

These recommendations all point to where security behaviour interventions need to coordinate with other parts of the business.

My talk at Cambridge Computer Laboratory provided an opportunity to widen the view to publicly-accessible services, and related security behaviours. As above, this can also include issues around up-to-date software and following advised behaviours. There can be satisficing just as in organisations, but also delegation to (informal) technical support (to ‘IT savvy’ people that users know or pay, rather than recognised IT-security teams). Information asymmetries then still exist, but those providing informal support are less distinct as experts, and less answerable for how they manage devices on behalf of others. Further, some security controls can be harmful despite their perceived benefits, which calls for clear expectations about the costs and losses associated with an intervention.

A foundation to support security behaviour interventions

The framework may guide documentation of the ‘security diet’ of employees, to understand how much of their time is going to security. Toward reducing errors, it is contradictory to promote security as being a serious concern in a way which leaves already-busy employees to try to find the time to make it work, increasing the likelihood of mistakes being made. We anticipate a need to capture the genuine choice architecture, built in part by the security team, and in part by individual employees and their security coping strategies. Capturing the realities of security in the workplace can inform a shift from policy compliance to policy concordance, and behaviours which are resilient to other workplace pressures.