In their 2019 publication ‘Seeing is Not Believing: Camouflage Attacks on Image Scaling Algorithms’, Xiao et al. demonstrated a fascinating and frightening exploit against several widely used image scaling algorithms. Using what Quiring et al. referred to as adversarial preprocessing, they crafted an attack image that closely resembles one image (the decoy) but shows a completely different image (the payload) when scaled down. In their example (below), an image of sheep scales down to reveal a wolf.

These attack images can be used in a number of scenarios, particularly data poisoning of deep learning datasets and covert dissemination of information. Deep learning models require large datasets for training. A series of carefully crafted attack images planted in public datasets can poison these models, for example by reducing the accuracy of object classification. Almost all image models are trained on images scaled down to a fixed size (e.g. 224 × 224) to reduce the computational load, so these attack images are highly likely to work if their dimensions are correctly configured. Because the attack images hide their malicious payload in plain sight, they also evade detection. Xiao et al. also described how an attack image could be crafted for a specific device (e.g. an iPhone XS) so that the browser on that device renders the malicious image instead of the decoy. This technique could be used to discreetly propagate payloads such as illegal advertisements.

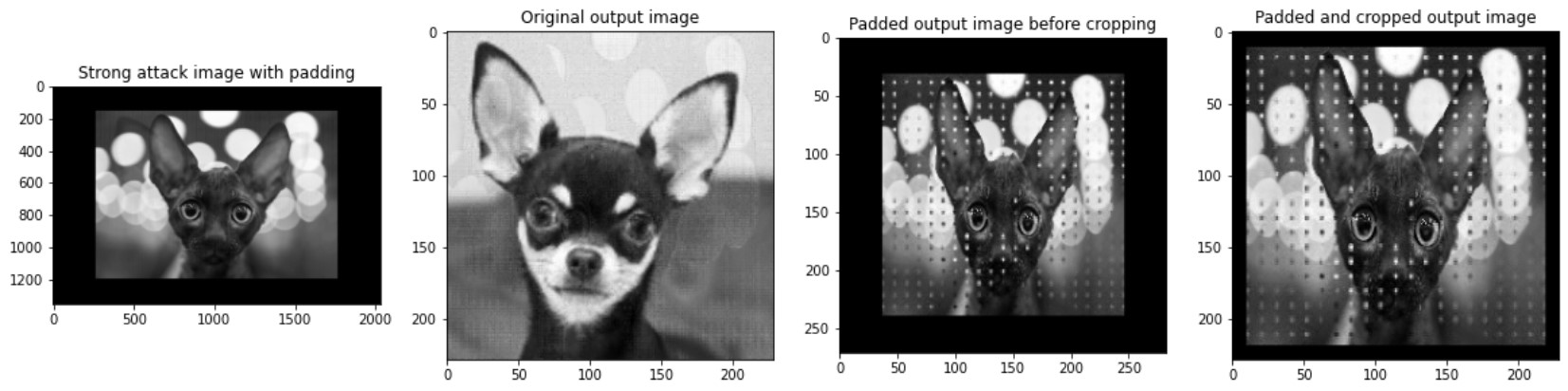

The natural stealthiness of this attack is dangerous enough, but the attack is also relatively easy to replicate. Xiao et al. published their source code in a GitHub repository, which anyone can run to create their own attack images. The maths behind the method is also well described in the paper, which allowed my group to replicate the attack for coursework in UCL’s Computer Security II module without referring to the authors’ source code. Our implementation of the attack is available at our GitHub repository. The coursework required us to select an attack detailed in a conference paper and replicate it. While working on it, we discovered a relatively simple way to stop these attack images from working and even allow the original content to be viewed. This is shown in the series of images below.

The attack exploits the scaling algorithm at a specific input and output resolution, so resizing the attack image to a resolution other than the one the attacker expected severely reduces or, in most cases, completely removes its effectiveness. Of course, always resizing to a different but fixed resolution would provide no protection, as the attacker could simply reconfigure the attack image to match the new resolution. Our preventive method instead adds a random amount of padding to the image, then scales the image down, leaving a user-defined amount of padding.
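The resolution dependence described above can be illustrated with a toy sketch in Python. This is not Xiao et al.'s method, which solves an optimisation problem against the scalers in real libraries. It assumes a deliberately simplified nearest-neighbour scaler on small greyscale images stored as lists of lists, and the helper names `downscale_nearest` and `craft_attack` are hypothetical.

```python
def downscale_nearest(img, out_h, out_w):
    """Toy nearest-neighbour downscale of a 2D list of grey values.

    Assumption: output pixel (y, x) copies source pixel
    (y * in_h // out_h, x * in_w // out_w). Real libraries use
    different sampling offsets and filters.
    """
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def craft_attack(decoy, payload):
    """Copy the decoy, then overwrite only the pixels the scaler will sample."""
    in_h, in_w = len(decoy), len(decoy[0])
    out_h, out_w = len(payload), len(payload[0])
    attack = [row[:] for row in decoy]
    for y in range(out_h):
        for x in range(out_w):
            attack[y * in_h // out_h][x * in_w // out_w] = payload[y][x]
    return attack


decoy = [[255] * 16 for _ in range(16)]  # 16x16 white "decoy"
payload = [[0] * 4 for _ in range(4)]    # 4x4 black "payload"
attack = craft_attack(decoy, payload)

# At the resolution the attacker planned for, the payload is recovered.
print(downscale_nearest(attack, 4, 4) == payload)

# At an unexpected resolution, the sampling grid mostly misses the
# modified pixels, so most of the output comes from the decoy.
unexpected = downscale_nearest(attack, 5, 5)
print(sum(row.count(0) for row in unexpected), "of 25 pixels are payload")
```

In this toy, only 16 of the 256 pixels in the attack image differ from the decoy, which is why the full-size image still looks like the decoy while the downscaled one does not.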

By padding the image instead of cropping it, no information from any legitimate image is lost. The random amount of padding also prevents any set of attack images from working reliably, as the attacker would need to anticipate four random padding values, calculated at runtime, for each image. This method does not involve any costly analysis, such as a colour histogram comparison between the original and scaled-down image, and, unlike cropping, it does not lose any information around the edges of the image. Unfortunately, it is not a perfect solution, as remnants of the payload remain (as seen in the image above). However, it does eliminate the effectiveness of the attack images, neutralising the attack.
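A minimal sketch of the random-padding idea, under stated assumptions, might look like the following. It is not our coursework implementation: it reuses a toy nearest-neighbour scaler, the names `random_pads`, `pad_image` and `defended_downscale` are hypothetical, the white fill colour and `max_pad` default are arbitrary choices, and it does not control how much padding is left in the output.

```python
import random


def downscale_nearest(img, out_h, out_w):
    """Toy nearest-neighbour downscale (an assumed, simplified scaler)."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def random_pads(max_pad, rng=random):
    """Draw four independent widths: (top, bottom, left, right)."""
    return tuple(rng.randint(0, max_pad) for _ in range(4))


def pad_image(img, pads, fill=255):
    """Surround a 2D list image with `fill` pixels; nothing is cropped."""
    top, bottom, left, right = pads
    width = left + len(img[0]) + right
    rows = [[fill] * width for _ in range(top)]
    rows += [[fill] * left + list(row) + [fill] * right for row in img]
    rows += [[fill] * width for _ in range(bottom)]
    return rows


def defended_downscale(img, out_h, out_w, max_pad=8, rng=random):
    """Pad by amounts chosen at runtime, then scale down."""
    return downscale_nearest(pad_image(img, random_pads(max_pad, rng)), out_h, out_w)


# Toy attack image: white decoy with black payload pixels placed exactly
# where a 16x16 -> 4x4 nearest-neighbour downscale will sample.
attack = [
    [0 if (y % 4 == 0 and x % 4 == 0) else 255 for x in range(16)]
    for y in range(16)
]

undefended = downscale_nearest(attack, 4, 4)  # every pixel is payload
shifted = downscale_nearest(pad_image(attack, (1, 1, 1, 1)), 4, 4)
print(sum(row.count(0) for row in undefended), "payload pixels undefended")
print(sum(row.count(0) for row in shifted), "payload pixels after padding")
```

The fixed `(1, 1, 1, 1)` padding is only there to make the demonstration repeatable. In use, `defended_downscale` draws fresh values on every call, so the attacker cannot know in advance where the sampling grid will fall. Some payload pixels can still be sampled by chance, which matches the remnants mentioned above.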

Steganography, the technique of hiding secret information in non-secret locations, matters here because it enables attacks that are especially difficult for an unsuspecting layperson to detect. Everyone is on high alert for malware, suspicious programs, scripts and BAT files, which could allow these more discreet attacks to pass under the radar. The main threat these images were said to pose was the poisoning of machine learning datasets. The impact of this would likely be quite minor, such as spoiling a detection algorithm a student is training, because more sophisticated machine learning systems are trained and tested with enough data that an issue would be detected and resolved before deployment. However, another threat identified was covert information dissemination, particularly of illicit content and advertisements. For example, a well-made covert advertisement could use these attack images to show some uninteresting content but, when rendered at a particular resolution, provide information on the procurement of illicit substances. There are parallels with cryptography, where only those who know the correct decryption key can access the information, but the advantage of this method is that, in the right configuration, the secret information can be disseminated widely but discreetly.

Finally, a word about the coursework that prompted this discussion and our proposed prevention method. We felt the coursework was a very practical way for us to delve deep into the world of security vulnerabilities and research. We explored many papers we otherwise would not have known existed, a harsh dose of reality that vulnerabilities and attackers are everywhere. In studying our selected attack, we gained an insight into the mind of a security researcher: the adversarial mindset and creativity needed to discover such a vulnerability, and even more so to find countermeasures. Replicating the attack was definitely a challenge, but it forced us to explore and understand the underlying vulnerability to the point where we could even propose a defence against it. We really appreciated the opportunity to see first-hand the level of understanding and detail that goes into security research. It definitely complemented the course and its teachings well.

We would like to thank Professor Steven Murdoch for his careful planning of this coursework and indeed the COMP0055 Computer Security II course as a whole, as well as the opportunity to write this post.